2019: Vivien Lee Hwee Sze, Roshni Jaya Sankaran, Yeo Pei Yun

2017: Rumaizah Binte Abdul Raof, Noor Ikhmah Binte Roslie, Alvina Chan, Kajol Nar Singh

1. Introduction

The existence of language presupposes a brain that allows it (Schoenemann, 2009). How our brain became adapted to language has long been debated. According to researchers, there was a co-evolution of both brain and language to allow one to adapt to the other (Deacon, 1997). But how did this happen?

We shall attempt to explore the answers to this question through this chapter – how different parts of the hominid brain evolved to provide the basic capacities for language, the factors that facilitated hominid brain growth, and how language eventually emerged amongst Homo sapiens and why. We shall also delve into the Mirror System Hypothesis, a highly contested and prominent theory, in our attempt to track the genesis of the human language.

2. How The Brain Evolved For Language

Both the brain and language share a complex and interdependent relationship. Our capacity to use language depends on the existing abilities of our brain, and similarly, our need to communicate with one another likely brought about changes in the brain to allow for the emergence of language (Schoenemann, 2009).

Different parts of our brain have changed or evolved over the course of human evolution to possibly contribute to our ability to use language, but the most notable change is the brain’s significant increase in size.

2.1 Increase in Brain Size

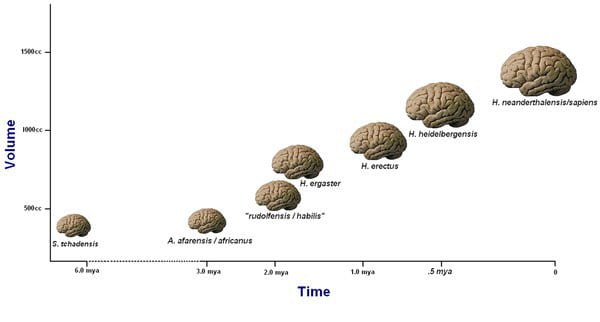

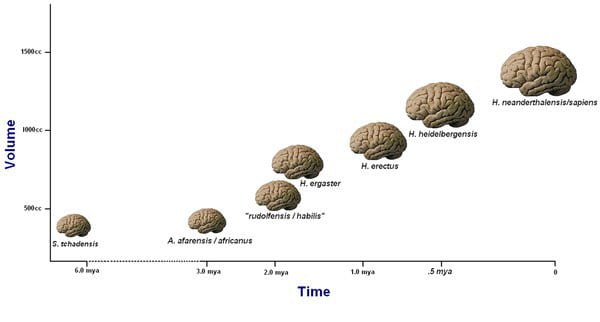

The human brain is said to have increased steadily in size from about 2 million to 300,000 years ago. It is about five times bigger than expected for an average mammal of our body size, and about three times bigger than that of an average primate of our body size (Schoenemann, 2009). These significant increases in brain size were most likely functional adaptations; otherwise they would have been selected against, as large brains are metabolically expensive to maintain. One unit of brain tissue is said to require 22 times the metabolic energy needed for the same unit of muscle tissue (Aiello, 1997). Larger brains are also linked with longer maturation periods in primates, which lead to fewer offspring per unit time (Schoenemann, 2009). The fact that the brain continued to increase in size despite these evolutionary costs suggests that the benefits of having a larger brain outweighed the costs.

Fig. 16.1 A visual representation of the growth of the hominid brain.

One benefit of a larger brain is that it possibly led to a greater number of distinct cortical areas (Kaas, 2013). As a result, specific areas of the neocortex, a brain structure involved in higher cognitive functions such as language, became less connected to one another, which allowed those areas to carry out tasks independently. This brought about functional localization, which might have contributed to the development of language areas in humans (Schoenemann, 2009).

2.2 Neocortical Evolution Of The Human Brain

The neocortex makes up the largest part of the cerebral cortex, which is the outer layer of the cerebrum. It consists of grey matter. It is considered the ‘center of extraordinary human cognitive abilities’ (Rakic, 2009) because it is responsible for a variety of higher-order brain functions such as sensory perception, cognition, generation of motor commands, spatial reasoning, and language.

Most language evolution theories have focused on the concept of neocortical evolution following the groundbreaking findings of Broca and Wernicke that demonstrated how neocortical damage led to a loss of language ability. The evolution of the neocortex in mammals is deemed fundamental in the development of higher cognitive functions of the brain.

The first step in the evolution of the human cerebral cortex was its enlargement, which occurred mainly by expansion of the surface area without a comparable increase in thickness (Rakic, 2009). This increase in neocortex size was likely influenced by increased pressures for cooperation and competition in early hominids (Sternberg & Kaufman, 2013).

2.2.1 Broca’s and Wernicke’s Areas

Broca’s area is located in the posterior-inferior frontal convexity of the neocortex, whereas Wernicke’s area is localized to the general area where the parietal, occipital, and temporal lobes meet (Schoenemann, 2009). Both areas are typically found in the left cerebral hemisphere. Damage to Broca’s area results in Broca’s aphasia, sometimes referred to as ‘non-fluent aphasia’, whereas damage to Wernicke’s area results in Wernicke’s aphasia, which is a kind of ‘fluent aphasia’.

Fig. 16.2 The arcuate fasciculus links Broca’s and Wernicke’s areas directly to each other.

A tract of nerve fibers known as the arcuate fasciculus directly connects Broca’s and Wernicke’s areas, allowing the two areas to interact and mediate complementary aspects of language processing (Schoenemann, 2009). The arcuate fasciculus is believed to have been modified during the course of human evolution, and these modifications could have played a role in the evolution of language (Aboitiz & Garcia, 1997; Rilling et al., 2008). A comparison with the homologues of Broca’s and Wernicke’s areas in the brains of macaque monkeys found that the direct connection linking the two areas was missing (Aboitiz & Garcia, 1997). The homologue of Wernicke’s area in macaque monkeys was found to project to prefrontal regions that are close to their homologue of Broca’s area, but not directly to it (Aboitiz & Garcia, 1997). The arcuate fasciculus therefore appears to have changed during human evolution to enable a more direct connection between Broca’s and Wernicke’s areas.

Additionally, it was found that the human arcuate fasciculus projects to the temporal lobe of the brain, but this projection was much smaller or absent in nonhuman primates (Rilling et al., 2008). This suggests that new connections between the temporal lobe and Broca’s area were likely to have been established after the divergence of the human and chimpanzee lineages, and that these connections subsequently linked regions involved in lexical-semantic and syntactic processing in modern humans (Rilling et al., 2008).

Both Broca’s and Wernicke’s areas have also been found to be significantly larger in absolute and relative size in humans than in macaque monkeys (Petrides & Pandya, 2002), though it is still not clear whether this increase in size is related to the evolution of language.

2.3 Non-Neocortical Evolution Of The Brain

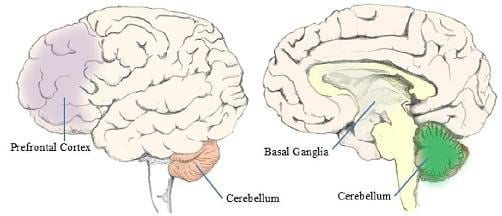

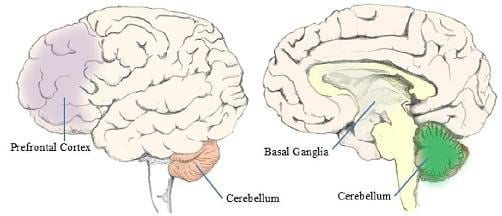

The role of non-neocortical structures in the evolution of speech has received significantly less attention over the years. However, non-neocortical structures such as the cerebellum and basal ganglia have also been linked with language and speech functions, for example in reading and language processing tasks (Booth, Wood, Lu, Houk & Bitan, 2007). In addition, lesions to the basal ganglia and cerebellum are known to disrupt speech (Gibson, 2011).

The emergence of such studies has called for greater emphasis on the anatomy of the whole brain and its connectivity, so as to better understand the neural origins of language. The sections below describe two key non-neocortical structures of the brain, namely the cerebellum and the basal ganglia, and how they might have adapted for language.

Fig. 16.3 Locations of the basal ganglia and cerebellum in the human brain.

2.3.1 Cerebellum

The cerebellum is involved in the modulation of a variety of linguistic functions such as verbal fluency, word retrieval, syntax, reading, writing (Murdoch, 2010), as well as speech perception, speech production, and semantic and grammatical processing (Ackermann, Mathiak & Riecker, 2007; De Smet, Baillieux, De Deyn, Marien & Paquier, 2007).

A recent study suggests that the cerebellum’s involvement in sensory-motor skills, imitation and production of complex action sequences may have contributed to the evolution of humans’ advanced technological capacities, such as tool using and making, which in turn may have been a preadaptation for language (Barton & Venditti, 2014).

2.3.2 Basal Ganglia

The basal ganglia are a group of subcortical nuclei mainly responsible for executive functions and behaviours, emotions and motor learning (Lanciego, Luquin & Obeso, 2012). They participate in a vital circuit loop that functions in the selection and voluntary execution of movements (Bear, Connors & Paradiso, 2007). The circuits connecting the cortex and the basal ganglia have been found to aid in both language production and comprehension, since damage to the basal ganglia results in motor problems as well as difficulties with the comprehension of syntax and the processing of semantic information.

The basal ganglia have been found to be twice as large in absolute size as predicted based on body size. As one would expect them to scale closely with overall body size if they were only involved in motor functions, this increase in size could be attributed to a role in higher cognitive functions, such as language (Schoenemann, 2009). Additionally, biological adaptations of the basal ganglia following the advent of bipedalism could also have formed the key adaptations needed for language (Lieberman, 2003).

3. Gestures and Language

Research has found that the left hemisphere is crucial in producing both vocal and sign language, as the same region and similar neural structures were activated when patients either spoke or signed (Pollick & De Waal, 2007). This relation between gestures and speech is further seen in primates, for whom gestures are an integral part of communication.

Gestures can vary according to the different species of primates. For example, while a gorilla may beat its chest to show aggression, chimpanzees will organize coordinated assaults to express the same emotion. This exemplifies how gestures might lay a concrete foundation for the evolution of language.

It has been suggested that the combination of gestures with vocalizations could have created intentional vocal communication as an appendage through the process of ontogenetic ritualization. Thereafter, the brain developed to accommodate the need to produce and articulate certain phonemes. Critics of this gestural theory have pointed out that it is difficult to pinpoint why primates would choose to adopt this less effective mode of communication. However, since primates are shown to have control only over hand movements, it is plausible that gestures acted as a precursor to the evolution of human language.

These observations support the gestural theory of the genesis of human language, which is further supported by the distinctive enlargement of the brain and vocal apparatus. Furthermore, the presence of gestural communication in human infants prior to the development of speech, as well as the right-hand bias of both ape and human gestures (Pollick & De Waal, 2007), provide support for the concurrent development of brain structures, improved gestural communication and, subsequently, language capabilities.

3.1 Mirror System Hypothesis

The mirror system hypothesis posits that language sprouted from proto-sign instead of the continuous development of vocalizations. This theory relies heavily on the concept of praxis, which is defined as the process by which a theory is enacted or realized. The hypothesis describes how praxic hand movements could have evolved into the gestures used for communication by apes, which were then passed down and spread along the hominid line. This also implies that the mechanisms that support language in the human brain evolved from a basic mechanism that was not originally linked to communication. On this view, the ability of the mirror system for grasping to both produce and recognize a set of actions provides the evolutionary basis for language parity. It is important to note that the mirror system hypothesis does not claim that this is all it takes to support the evolution of language. However, it does argue that extensions of mirror systems, first for praxis and then for communication, played a key role in the evolution of the mechanisms that support language.

3.1.1 Apes and Gestures

Apes in general have a limited range of gestures, which varies across species. Any new gestures that emerge are postulated to have been created and learned. Tomasello and Call (1997) posited a process of ontogenetic ritualisation, through which a dyad may acquire a new gesture. Additionally, they offer the concept of human-supported ritualisation to explain why captive apes may learn to point, even though this behaviour is not seen in the wild (Arbib, 2017).

Chimpanzees, for example, use a distinct type of arm raise to indicate that they are about to commence play (Goodall, 1986). Through this, we can see how an action that originally served other purposes has evolved to become a communicative signal. In some other species like orangutans, infants suck on the mother’s lips as she is consuming food to obtain morsels of food from her (Bard, 1990). At around 2.5 years old, they begin enacting gestures that include going towards the mother’s face to get food instead of physically touching her lips (Liebal et al., 2006).

Though ontogenetic ritualization may be the main mechanism involved in establishing a standardized signal between a pair of individuals, other mechanisms may be needed if gestures are to spread to the whole community. Some gestures are also found to be confined to a specific group of chimpanzees and are not observable in another group. This argues against the theory that ontogenetic ritualization is the only procedure by which any type of gesture is acquired (Goodall, 1986). For example, two orangutan communities in two different zoos have been observed to offer their arm along with morsels of food, whereas only a single group of gorillas was seen to enact gestures that included the shaking of the arms and another gesture known as the chuck up (Liebal et al., 2006). In addition, some other gestures, such as the chimpanzees’ hand clasp, are indicative of grooming. These gestures support the theory that social learning plays a key role in how gestures are acquired by apes (Nishida, 1980; Pika, Liebal, Call et al., 2005; Whiten et al., 2001).

Arbib (2012) postulates that where a gesture could be formed through the process of ontogenetic ritualization, each member of the group will have the ability to acquire it independently within a dyad. Once this gesture is established, however, it can spread to the rest of the group via social learning. Additionally, it can be rediscovered by dyads who then begin to enact it of their own accord through their own interactions (Arbib, 2012).

3.1.2 Broca’s Area and Mirror Neurons

According to Arbib (2017), the “F5 in the premotor cortex of the macaque that contains mirror neurons for grasping is homologous to (is the evolutionary cousin of) Broca’s area of the human brain, an area implicated in the production of signed languages as well as speech”. The F5 area of the premotor cortex controls praxic movements that occur during grasping and between the hand and mouth. In the monkey brain, this area contains hand-related motor neurons with an interesting characteristic: they fire not only when the monkey carries out an action itself but also when it observes a human or another monkey carrying out a similar action. This ties in with the hypothesis that neurons could possibly learn ‘to associate related patterns of sensory data rather than being committed to learn specifically pigeonholed categories’ (Arbib, 2012).

Arbib (2012) suggests that the ‘language-ready brain’ belonging to the first Homo sapiens did in fact support protosign and protospeech. He proposes two possibilities as to how this may have occurred. The first possibility is that languages could have evolved directly as speech (MacNeilage, 1998), and the second possibility is that they stemmed from sign language (Stokoe, 2001).

His approach suggests that from Homo habilis onwards, hominids possessed a protolanguage that had its roots in manual gestures. This provided the structural foundation for a protolanguage that was based on vocal gestures. However, the development of protosign and of this vocal protolanguage should be viewed as having occurred concurrently.

Arbib (2012) argues that once protosign had stabilised, conventionalized gestures could displace other pantomimes in terms of possessing a highly flexible semantic structure. This could allow protospeech to continuously develop, as some vocalizations began to be conventionalized as well. ‘The demands of an increasingly spoken protovocabulary might have provided the evolutionary pressure that yielded a vocal apparatus and corresponding neural control. This supported the human ability for rapid production and co-articulation of phonemes that underpins speech as we know it today. Data on hand-voice correlations in both monkey and human are adduced in support of this view’ (Arbib, 2012).

The Darwinian view suggests that phonology came first, in the form of the skill to produce songs without any type of meaning. However, according to Jespersen (1921), various songs became connected with various social contexts, and this allowed for the transformation from holophrasis to small phrases, in a transition similar to that from the biological evolution charted by the mirror system hypothesis to cultural evolution. Numerous mechanisms that had evolved to support protosign had to extend collaterals in order to gain control over the vocal system. This supported the increasingly accurate control of vocalization needed for speech. However, this would have been adaptive only once protosign had developed over the scaffolding that pantomime provided in order to supply open-ended semantics.

3.2 Counter-Evidence To The MSH

Conversely, existing studies have argued against Arbib’s (2012) mirror system hypothesis, pointing out two puzzling aspects of the hypothesis.

3.2.1 Argument 1: The mirror system is not essential for either signed or spoken language processing.

Arbib (2012, p. 174) postulates that “the mechanisms that gets us to the role of Broca’s area in language depend in a crucial way on the mechanisms established to support a mirror system for grasping.” He argues that mirror neurons exist and that they encode the articulatory form of signs and words. These neurons fire whenever a sign is articulated or seen, or when a word is spoken or heard. He also asserts that mirror neurons mediate understanding, as they function as a part of the neural circuitry (Arbib, 2012, p. 139).

However, studies have shown that Broca’s area does not demonstrate properties related to a mirror system for either sign or speech. The evidence suggests that the mirror system does not play a significant role in language processing for either visual-manual or auditory-vocal languages.

For speech, studies carried out by Hickok et al. (2011) provide evidence that Broca’s area is not critical for speech perception. For instance, patients with lesions to the fronto-parietal human mirror system (i.e. Broca’s area) performed at ceiling level during syllable discrimination and word comprehension tests. This indicates that damage to Broca’s area does not necessarily cause deficits in speech perception or comprehension. Furthermore, the degree of speech fluency does not correlate with word comprehension or syllable discrimination ability (Hickok, 2010). Intact and precise speech perception abilities are found in 1) babies who are unable to fully control their speech articulators, 2) individuals undergoing the Wada test, which inhibits their ability to produce speech (Kaufman & Milstein, 2013), and 3) individuals with developmental anarthria, a neurological disorder that prevents speech production. These findings therefore suggest that if mirror neurons for speech are present, they are not essential for speech perception.

For sign, it is uncertain whether sign-related mirror neuron populations exist. Even if they do, there is little evidence that they are essential to the comprehension and perception of sign language. According to Hickok et al. (1996), deaf patients who suffer injury to Broca’s area have unimpaired sign comprehension and perception, even though they exhibit deficits in sign articulation. In a study by Corina and Knapp (2008), it was suggested that the inferior parietal cortex, namely the supramarginal gyrus, was involved in sign comprehension and production. However, conjunction analysis conducted by the same researchers revealed overlapping activation in the superior parietal lobule instead of the inferior parietal cortex (Knapp and Corina, 2010). When the supramarginal gyrus was disrupted by electrical stimulation, the ability to imitate signs remained unaffected while the ability to produce signs was disrupted (Corina et al., 1999). These findings therefore suggest that if sign-related mirror neurons exist, they hold little significance for sign perception.

Emmorey et al. (2010) conducted an experiment on deaf signers and hearing individuals who had no knowledge of sign language. Participants passively viewed video clips of ASL verbs (e.g. to-dance) and pantomimes (e.g. peeling an imaginary banana) while their neural activity was examined. Results showed that for deaf signers, Broca’s area was engaged during the perception of ASL verbs but not during the perception of pantomimes. Conversely, for the hearing non-signers, both ASL verbs and pantomimes strongly activated the fronto-parietal cortex even though both were meaningless to them. From the lack of engagement of the mirror system in the deaf subjects, it was inferred that human communication does not require automatic sensorimotor resonance between action and perception.

All in all, impairments to Broca’s region or to premotor regions do not cause deficits in the comprehension and perception of signed and spoken language. A mirror neuron system does not seem to underlie language perception for signers and speakers.

3.2.2 Argument 2: The mirror system hypothesis is not consistent with the properties of co-sign or co-speech gesture.

Arbib (2012) argues that the “pervasiveness of co-speech gesture in modern human language users supports his hypothesis as the multimodality of modern language could be the evolutionary result of multimodal protolanguages”. The issue with that assertion is that the modern equivalents of protosigns, namely conventionalised gestures that are specific to individual cultures and more arbitrary in form, such as the horns gesture or the ‘ok’ gesture, are not produced concurrently with speech (McNeill, 1992). Additionally, pantomimes do not occur simultaneously with speech, but rather as a “component” gesture or a type of demonstration produced distinctly from speech. In a way, both modern protosigns and pantomimes repel speech with regard to both expressivity and timing.

Gesticulations play an important role, as they take place simultaneously with speech (McNeill and Duncan, 2000). They are not the same as conventional gestures or pantomimes. It has been suggested that co-speech gestures combine multiple thought elements concurrently, whereas sign and speech convey thought elements individually by segmenting them into phrases, words, or morphemes. Likewise, in sign and speech, meaning is categorized and combined into hierarchical structures, whereas gestures express meaning globally. For instance, the hand location, movement, or shape acquires meaning only as part of a whole. Speech and gestures are thus intertwined (Mayberry and Jaques, 2000).

However, it is unclear how or why this highly synergistic relationship between speech and gesture emerged from the expanding spiral between protospeech and protosign. It is also unclear how protolanguage (a combination of protospeech and protosign) evolved into the integrated semiotic system of gesture and speech observed in modern humans.

According to Sandler (2009), signers produce co-sign gestures with their mouths. For instance, when manually signing ‘bowling-ball’, signers concurrently puff their cheeks to indicate roundness. This presents evidence that mouth gestures are non-combinatoric, idiosyncratic, synthetic, and global. It was concluded that “speakers gesture with their hands, signers gesture with their mouths” (Sandler, 2009, p. 241).

A question arises: what is the rationale for co-sign gestures during signing? A possible conclusion is that language users convey thoughts using a system that includes synthetic, global, imagistic schemas (conveyed in gesticulations with the body, face, or hands), as well as combinatorial, codified meaning structures (conveyed with signed or spoken language). It remains unclear why modern signers produce gesticulations and signs in the same modality and how protosign evolved into gesticulation.

4. References

Aboitiz, F., & García, R. (1997). The evolutionary origin of the language areas in the human brain. A neuroanatomical perspective. Brain Research Reviews, 25(3), 381-396.

Ackermann, H., Mathiak, K., & Riecker, A. (2007). The contribution of the cerebellum to speech production and speech perception: clinical and functional imaging data. The Cerebellum, 6(3), 202-213.

Aiello, L. C. (1997). Brains and guts in human evolution: the expensive tissue hypothesis. Brazilian Journal of Genetics, 20.

Arbib, M. A. (2012). How the brain got language: The mirror system hypothesis (Vol. 16). Oxford University Press.

Arbib, M. A. (2017). Toward the language-ready brain: biological evolution and primate comparisons. Psychonomic bulletin & review, 24(1), 142-150.

Bard, K. A. (1990). “Social tool use” by free-ranging orangutans: A Piagetian and developmental perspective on the manipulation of an animate object. “Language” and intelligence in monkeys and apes: Comparative developmental perspectives, 356-378.

Barton, R. A., & Venditti, C. (2014). Rapid evolution of the cerebellum in humans and other great apes. Current Biology, 24(20), 2440-2444.

Bear, M. F., Connors, B. W., & Paradiso, M. A. (2007). Neuroscience: Exploring the brain (3rd ed.). Philadelphia: Lippincott Williams & Wilkins.

Booth, J. R., Wood, L., Lu, D., Houk, J. C., & Bitan, T. (2007). The role of the basal ganglia and cerebellum in language processing. Brain research, 1133, 136-144.

Corina, D. P., S. L. McBurney, C. Dodrill, K. Hinshaw, J. Brinkley & G. Ojemann. (1999). Functional roles of Broca’s area and SMG: Evidence from cortical stimulation mapping in a deaf signer. NeuroImage 10. 570–581.

Corina, D. & H. P. Knapp. (2008). Signed language and human action processing: Evidence for functional constraints on the human mirror-neuron system. Annals of the New York Academy of Sciences 1145. 100–112.

Deacon, T. W. (1997). The symbolic species: The co-evolution of language and the brain. New York: W.W. Norton.

De Smet, H. J., Baillieux, H., De Deyn, P. P., Marien, P., & Paquier, P. (2007). The cerebellum and language: the story so far. Folia Phoniatrica et Logopaedica, 59(4), 165-170.

Emmorey, K., J. Xu, P. Gannon, S. Goldin-Meadow & A. Braun. (2010). CNS activation and regional connectivity during pantomime observation: No engagement of the mirror neuron system for deaf signers. NeuroImage 49. 994–1005.

Gibson, K. R. (2013). Not the neocortex alone: other brain structures also contribute to speech and language. In The Oxford Handbook of Language Evolution.

Goodall, J. (1986). The chimpanzees of Gombe: Patterns of behavior. Massachusetts: Belknap Press.

Hickok, G. (2010). The role of mirror neurons in speech and language processing. Brain & Language 112. 1–2.

Hickok, G., M. Costanzo, R. Capasso & G. Miceli. (2011). The role of Broca’s area in speech perception: Evidence from aphasia revisited. Brain & Language 119. 214–220.

Hickok, G., M. Kritchevsky, U. Bellugi & E. S. Klima. (1996). The role of the left frontal operculum in sign language aphasia. Neurocase 2(5). 373–380.

Jespersen, O. (1921/1964). Language: Its nature, development and origin. New York: Norton.

Kaas, J. H. (2013). The evolution of brains from early mammals to humans. Wiley Interdisciplinary Reviews: Cognitive Science, 4(1), 33-45.

Kaufman, D., & Milstein, M. (2013). Learn more about Wada Test. Kaufman’s Clinical Neurology For Psychiatrists (Seventh Edition).

Knapp, H. & D. Corina. (2010). A human mirror neuron system for language: Perspectives from sign languages of the deaf. Brain & Language 112. 36–43.

Lanciego, J. L., Luquin, N., & Obeso, J. A. (2012). Functional neuroanatomy of the basal ganglia. Cold Spring Harbor Perspectives in Medicine, 2(12), 1-20.

Liebal, K., Pika, S., & Tomasello, M. (2006). Gestural communication of orangutans (Pongo pygmaeus). Gesture, 6(1), 1-38.

Lieberman, P. (2003). Motor control, speech, and the evolution of human language. Studies in the Evolution of Language, 3, 255-271.

MacNeilage, P. F. (1998). The frame/content theory of evolution of speech production. Behavioral and brain sciences, 21(4), 499-511.

Mayberry, R. I. & J. Jaques. (2000). Gesture production during stuttered speech: Insights into the nature of gesture-speech integration. In D. McNeill (ed.), Language and gesture, 199–214. Cambridge: Cambridge University Press.

McNeill, D. (1992). Hand and mind: What gestures reveal about thought. Chicago: University of Chicago Press.

McNeill, D. & S. Duncan. (2000). Growth points in thinking-for-speaking. In D. McNeill (ed.), Language and gesture, 141–161. Cambridge: Cambridge University Press.

Murdoch, B. E. (2010). The cerebellum and language: historical perspective and review. Cortex, 46(7), 858-868.

Nishida, T. (1980). The leaf-clipping display: a newly-discovered expressive gesture in wild chimpanzees. Journal of Human Evolution, 9(2), 117-128.

Petrides, M., & Pandya, D. N. (2002). Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. The European Journal of Neuroscience, 16(2), 291–310.

Pollick, A. S., & De Waal, F. B. (2007). Ape gestures and language evolution. Proceedings of the National Academy of Sciences, 104(19), 8184-8189.

Rakic, P. (2009). Evolution of the neocortex: a perspective from developmental biology. Nature Reviews Neuroscience, 10(10), 724.

Rilling, J. K., Glasser, M. F., Preuss, T. M., Ma, X., Zhao, T., Hu, X., & Behrens, T. E. J. (2008). The evolution of the arcuate fasciculus revealed with comparative DTI. Nature Neuroscience, 11(4), 426–428.

Sandler, W. (2009). Symbiotic symbolization by hand and mouth in sign language. Semiotica 174, 241–275.

Schoenemann, P. T. (2009). Evolution of brain and language. Language Learning, 59, 162-186.

Sternberg, R. J., & Kaufman, J. C. (2013). Social Cognition, Inhibition, and Theory of Mind: The Evolution of Human Intelligence. In The Evolution of Intelligence (pp. 37-64). Psychology Press.

Stokoe, W. C. (2001). Language in hand: Why sign came before speech. Gallaudet University Press.

Tomasello, M., & Call, J. (1997). Primate cognition. Oxford University Press, USA.